In artificial intelligence, research on giving machines a form of imagination, that is, the ability to perceive an environment and draw pictures of it, has attracted much attention. Recently, researchers at CRIPAC proposed a new method for generating face images that can produce over 200,000 virtual images that do not exist in the real world.

This method can effectively reduce the cost of data acquisition in heterogeneous face recognition and make full use of a small quantity of data. With the help of the generated data, the researchers have observed significant performance improvements in a series of challenging face recognition applications, such as NIR-VIS recognition, Thermal-VIS recognition, sketch-photo recognition, and pose-invariant recognition.

The paper has been accepted as a Spotlight at NeurIPS 2019, one of the top international academic conferences in artificial intelligence. The conference received 6,743 submissions and accepted 1,428 papers (including 36 Orals and 164 Spotlights). The combined acceptance rate for Orals and Spotlights is only about 3% (200 of 6,743 submissions).

I. Background

Heterogeneous face recognition has a wide range of real-world applications. For example, near-infrared (NIR) sensors are robust to illumination variations and can capture images even in dark environments, so mainstream mobile phone manufacturers (such as Apple, Huawei, and Xiaomi) use NIR-based face recognition technology. However, heterogeneous face recognition also faces many challenges and has not been well addressed, owing to the large gap between NIR and visible-light (VIS) data and the shortage of paired heterogeneous data.

In recent years, high-quality image generation has offered a new way to approach the heterogeneous face recognition problem. However, previous generative methods rely on conditional image generation to translate images from one domain to another. Such methods have two major limitations (taking NIR-VIS heterogeneous data as an example):

(1) Lack of diversity. Given one NIR image, a conditional image generation method can synthesize only one VIS image, so these methods can produce only a small amount of data. In addition, apart from the change in spectral information, the synthesized VIS image has the same attributes (such as pose and expression) as the original NIR image. As a result, the generated data adds little intra-class diversity to the original NIR data.

(2) Difficulty in maintaining identity information. The conditional image generation method requires the generated VIS image to maintain exactly the same identity as the original input NIR image. However, due to the lack of effective constraints on intra-class and inter-class distances, identity information is difficult to preserve.

II. Proposed Method

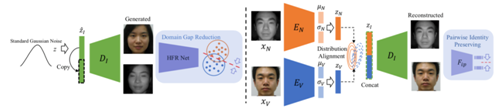

Figure 1 illustrates the purpose of our proposed Dual Variational Generation (DVG) model. DVG is an unconditional generative model that reduces the domain gap in heterogeneous face recognition networks by generating large-scale paired data from a noise distribution. To achieve this, we designed a dual variational autoencoder. Given a pair of heterogeneous face images with the same identity, the dual variational autoencoder learns the joint distribution of the paired heterogeneous data in a latent space. To ensure identity consistency, we impose a distribution alignment loss in the latent space and a pairwise identity preservation loss in the pixel space.
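The two identity-consistency terms described above can be sketched roughly as follows. This is only a minimal illustration under stated assumptions, not the formulation from the paper: the squared-distance form of both terms, the weights `w_align` and `w_id`, the scalar `recon_error`, and the idea that `feat_nir` and `feat_vis` come from some fixed face-feature extractor are all hypothetical choices made for the example. Only the KL term is the standard variational autoencoder regularizer.

```python
import math


def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ), the usual VAE regularizer."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def distribution_alignment_loss(mu_nir, mu_vis):
    """Latent-space term (assumed form): pull the two posterior means together."""
    return squared_l2(mu_nir, mu_vis)


def pairwise_identity_loss(feat_nir, feat_vis):
    """Pixel-space term (assumed form): identity features extracted from the
    two reconstructed images should match. The feature extractor itself is
    hypothetical and not shown here."""
    return squared_l2(feat_nir, feat_vis)


def total_loss(mu_nir, logvar_nir, mu_vis, logvar_vis,
               feat_nir, feat_vis, recon_error, w_align=1.0, w_id=1.0):
    """Reconstruction + KL for both encoders + the two consistency terms."""
    kl = (kl_to_standard_normal(mu_nir, logvar_nir)
          + kl_to_standard_normal(mu_vis, logvar_vis))
    return (recon_error + kl
            + w_align * distribution_alignment_loss(mu_nir, mu_vis)
            + w_id * pairwise_identity_loss(feat_nir, feat_vis))
```

In this sketch, the alignment term is zero exactly when the two encoders place a same-identity pair at the same point in the latent space, which is the intuition behind aligning the two distributions.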

In this way, we endow machines with the ability to imagine. As shown in Figure 2, the generated paired heterogeneous data vary in pose, expression, and other attributes, so the generated virtual data has rich intra-class diversity. In addition, unlike conditional image generation methods, the proposed model does not require the generated data to belong to a specific category; it only constrains the two images in each generated heterogeneous pair to share the same identity.
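To make the contrast with conditional generation concrete, the following toy sketch draws latent codes from a standard normal distribution and decodes each one into a pair of outputs. It assumes a decoder with two linear heads reading the same latent code; `toy_decoder`, its weight matrices, and `generate_pairs` are hypothetical stand-ins for a trained network and are not taken from the paper.

```python
import random


def sample_latent(dim, rng):
    """Draw z ~ N(0, I) as a list of independent standard normal values."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def toy_decoder(z, weights_nir, weights_vis):
    """Hypothetical decoder: two linear heads read the same latent code,
    so both outputs of a pair are driven by one sample."""
    nir = [sum(w * v for w, v in zip(row, z)) for row in weights_nir]
    vis = [sum(w * v for w, v in zip(row, z)) for row in weights_vis]
    return nir, vis


def generate_pairs(n, dim, weights_nir, weights_vis, seed=0):
    """Generate n (nir, vis) pairs from noise; no input image is needed."""
    rng = random.Random(seed)
    return [toy_decoder(sample_latent(dim, rng), weights_nir, weights_vis)
            for _ in range(n)]


# Arbitrary illustrative weights: 3-dimensional latent code, 2-dimensional outputs.
W_NIR = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
W_VIS = [[0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
pairs = generate_pairs(5, 3, W_NIR, W_VIS)
```

Because each pair comes from a fresh noise sample rather than from an input image, the number of pairs is limited only by how many samples are drawn, and different samples yield different outputs.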

III. Applications

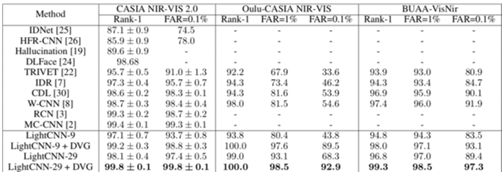

1. NIR-VIS recognition. We verified the effectiveness of the dual generation model on three NIR-VIS databases: CASIA NIR-VIS 2.0, Oulu-CASIA NIR-VIS, and BUAA-VisNir. Figure 2 shows that the generated data has high intra-class diversity in attributes such as pose and expression. The quantitative results in Table 1 show significant gains in recognition performance. For example, on the Oulu-CASIA NIR-VIS database, VR@FAR=0.1% increases by 24.6%. Our method achieves the best recognition performance on all three NIR-VIS databases.

2. Thermal-VIS recognition. Because thermal images capture thermal radiation even in dark environments, thermal cameras are widely deployed in wearable devices, watchtowers, and checkpoints. Many research institutions, including the US Army Research Laboratory, are actively exploring how to improve the accuracy of thermal infrared face recognition. On the Tufts Face database, we used the dual generation model for data augmentation (as shown in Figure 3) and improved the rank-1 accuracy by 17%.

3. Sketch-photo recognition. Sketch-photo synthesis is widely used in criminal investigations, where a portrait of a suspect is produced from an eyewitness description. Since collecting sketch images is time-consuming and laborious, we use the dual generation model pre-trained on the CUFSF database to generate a large amount of data. With the generated data, as shown in Figure 3, VR@FAR=1% increased by 16.82%.

4. Profile-frontal face recognition. Real-world applications involve many face images with extreme poses. Because these images lose much of the useful information, recognizing them is a great challenge. By generating profile-frontal face pairs, the dual generation model can reduce intra-class differences and make the recognition system more robust to intra-class variation. The visualization results on the Multi-PIE database are shown in Figure 3. Using the generated data, we improved the rank-1 accuracy at ±90° by 18.5%.

5. ID-camera recognition. In identity verification systems, passengers' identities must be confirmed against the photos on their ID cards. However, the photos on ID cards differ greatly from those taken by on-site cameras. On the NJU-ID database, using the generated data, we improved VR@FAR=1% by 6.2%.
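The two metrics quoted in the applications above, verification rate at a fixed false accept rate (VR@FAR) and rank-1 accuracy, can be computed roughly as in the sketch below. It follows the common definitions of these metrics and assumes similarity scores where higher means more likely the same identity. The function names, the strict-threshold tie handling, and the toy scores are illustrative assumptions; the exact evaluation protocol for each database is not described in this article.

```python
def vr_at_far(genuine, impostor, far):
    """Fraction of genuine pairs accepted at a threshold chosen so that at
    most `far` of the impostor pairs are accepted.

    genuine:  similarity scores of same-identity pairs
    impostor: similarity scores of different-identity pairs
    """
    ranked = sorted(impostor, reverse=True)
    allowed = int(far * len(ranked))  # impostor accepts we can afford (rounded down)
    # Accept only scores strictly above the threshold, so at most `allowed`
    # impostor pairs pass even when scores are tied.
    threshold = ranked[allowed] if allowed < len(ranked) else float("-inf")
    return sum(1 for s in genuine if s > threshold) / len(genuine)


def rank1_accuracy(similarity, probe_ids, gallery_ids):
    """Fraction of probes whose most similar gallery entry has the same identity.

    similarity[i][j] is the score between probe i and gallery entry j.
    """
    hits = 0
    for row, probe_id in zip(similarity, probe_ids):
        best = max(range(len(row)), key=row.__getitem__)
        hits += gallery_ids[best] == probe_id
    return hits / len(probe_ids)


# Toy scores, made up purely for illustration.
genuine_scores = [0.9, 0.8, 0.4, 0.95]
impostor_scores = [0.1, 0.2, 0.3, 0.5, 0.05, 0.15, 0.25, 0.35, 0.45, 0.85]
vr = vr_at_far(genuine_scores, impostor_scores, far=0.1)
```

A lower FAR forces a stricter threshold, which is why VR@FAR=0.1% is a harder operating point than VR@FAR=1%.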

The above experiments show that the dual generation model can be widely applied to various heterogeneous face recognition tasks. We will explore more applications of the proposed method in the future.
