Learning Coupled Feature Spaces for Cross-modal Matching

People

Kaiye Wang

Ran He

Wei Wang

Liang Wang

Tieniu Tan

Overview

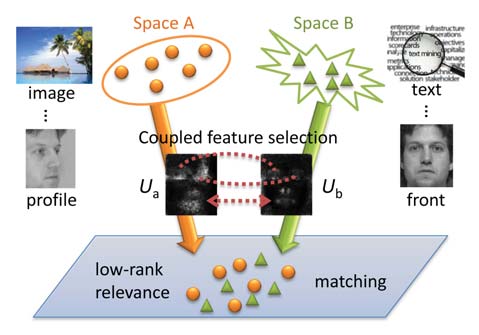

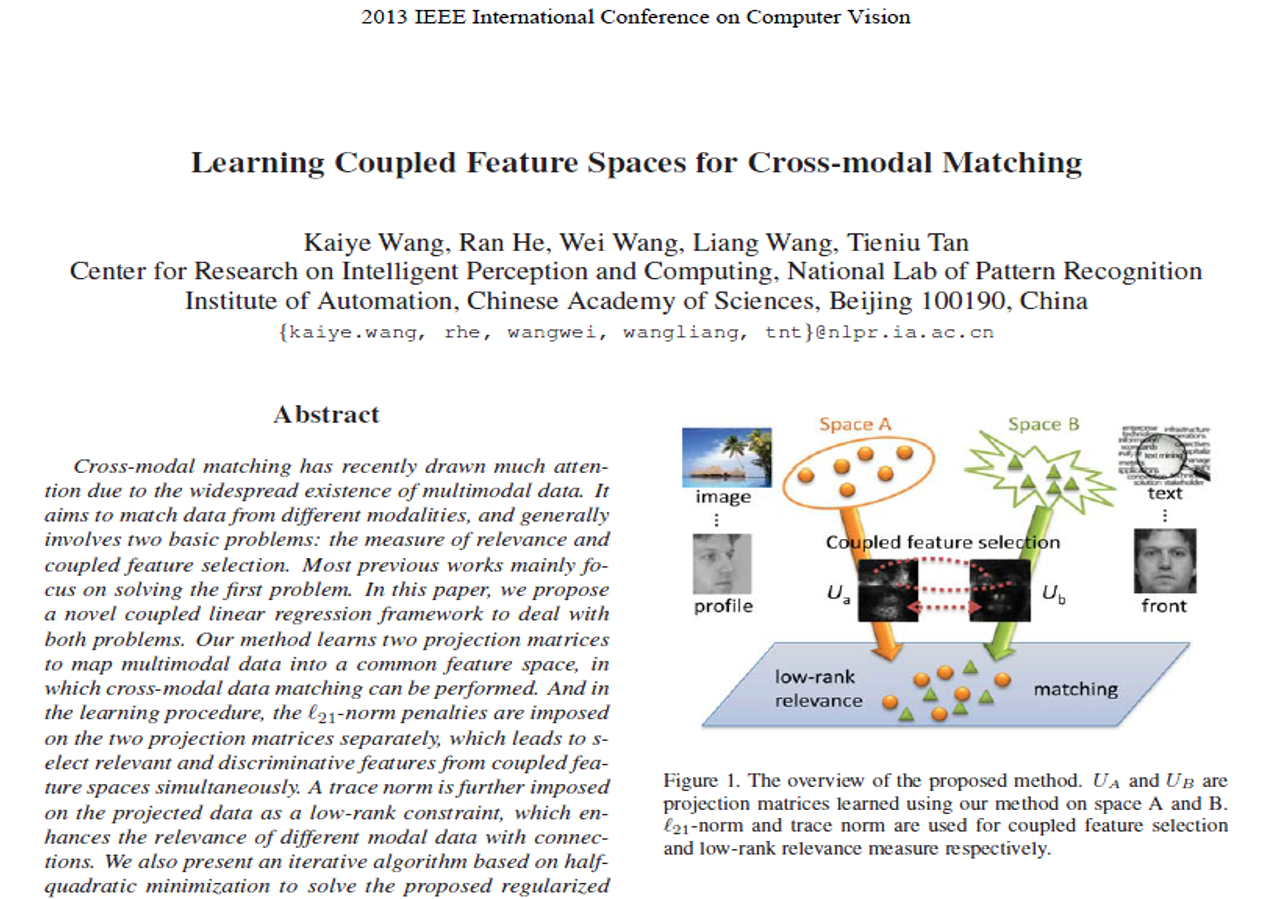

Cross-modal matching has recently drawn much attention due to the widespread existence of multimodal data. It aims to match data from different modalities and generally involves two basic problems: measuring relevance and selecting coupled features. Most previous work focuses on the first problem. In this paper, we propose a novel coupled linear regression framework that deals with both. Our method learns two projection matrices that map multimodal data into a common feature space, in which cross-modal matching can be performed. During learning, ℓ2,1-norm penalties are imposed on the two projection matrices separately, so that relevant and discriminative features are selected from the coupled feature spaces simultaneously. A trace norm is further imposed on the projected data as a low-rank constraint, which enhances the relevance between connected data from different modalities. We also present an iterative algorithm based on half-quadratic minimization to solve the proposed regularized linear regression problem. Experimental results on two challenging cross-modal datasets demonstrate that the proposed method outperforms state-of-the-art approaches.
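To make the idea in the overview more concrete, here is a minimal NumPy sketch of one ingredient: an ℓ2,1-regularized linear regression solved by iterative reweighting, a common half-quadratic style scheme. It is not the authors' implementation. It assumes each modality is regressed onto a shared one-hot label matrix, it omits the trace-norm (low-rank) term entirely, and the names `fit_l21_regression`, `cross_modal_match`, `lam`, and `eps` are hypothetical choices made for illustration.

```python
import numpy as np


def l21_norm(U):
    """Sum of the Euclidean norms of the rows of U."""
    return float(np.sum(np.linalg.norm(U, axis=1)))


def fit_l21_regression(X, Y, lam=0.1, n_iter=30, eps=1e-8):
    """Approximately minimise 0.5 * ||X U - Y||_F^2 + lam * ||U||_{2,1}.

    X: (n_samples, n_features) data from one modality.
    Y: (n_samples, n_classes) shared targets (assumed one-hot labels).
    The trace-norm term described in the overview is NOT included here.
    """
    d = X.shape[1]
    XtX, XtY = X.T @ X, X.T @ Y
    w = np.ones(d)  # auxiliary per-feature weights, one per row of U
    for _ in range(n_iter):
        # With the weights fixed, the problem is a weighted ridge regression.
        U = np.linalg.solve(XtX + lam * np.diag(w), XtY)
        # With U fixed, update the weights from the smoothed row norms.
        w = 1.0 / np.sqrt(np.sum(U ** 2, axis=1) + eps)
    return U


def cross_modal_match(Xq, Uq, Xg, Ug):
    """For each query row, return the index of the most similar gallery row,
    using cosine similarity in the common (projected) space."""
    Pq, Pg = Xq @ Uq, Xg @ Ug
    Pq = Pq / (np.linalg.norm(Pq, axis=1, keepdims=True) + 1e-12)
    Pg = Pg / (np.linalg.norm(Pg, axis=1, keepdims=True) + 1e-12)
    return np.argmax(Pq @ Pg.T, axis=1)
```

In this sketch, one projection matrix would be fitted per modality (for example `U_img = fit_l21_regression(X_img, Y)` and `U_txt = fit_l21_regression(X_txt, Y)`), after which `cross_modal_match(X_txt, U_txt, X_img, U_img)` returns, for each text sample, the index of its closest image. Rows of a projection matrix that shrink towards zero correspond to features the penalty treats as less relevant.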

Paper

Experimental Results

The top nine images retrieved by our method on the Pascal VOC dataset, given the tags "boat+water".

Two examples of text queries (the first column) and the top five images (columns 3-7) retrieved by our method on the Wiki dataset. The second column contains the paired images of the text queries.

Acknowledgments

This work is jointly supported by the National Basic Research Program of China (2012CB316300), the National Natural Science Foundation of China (61175003, 61135002, 61202328, 61103155), and the Hundred Talents Program of CAS.